FOR those who reckon that brain-computer interfaces will never catch on, there is a simple answer: they already have. Well over 300,000 people worldwide have had cochlear implants fitted in their ears. Strictly speaking, this hearing device does not interact directly with neural tissue, but the effect is not dissimilar.

A processor captures sound, which is converted into electrical signals and sent to an array of electrodes in the inner ear, stimulating the cochlear nerve so that sound is heard in the brain. Michael Merzenich, a neuroscientist who helped develop the device, explains that it provides only a crude representation of speech, “like playing Chopin with your fist”. But given a little time, the brain learns to make sense of the signals.
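Real implant processors are proprietary and far more elaborate, but the basic step described above, turning sound into stimulation for individual electrodes, can be sketched. The Python below is a minimal illustration under simplified, assumed settings: each hypothetical electrode channel is tied to one centre frequency, the sound is cut into short frames, the strength of each centre frequency in a frame is measured with a single-bin Fourier transform, and that value is squeezed logarithmically onto one of 16 current steps. The channel frequencies, frame length, 80 dB range and number of steps are illustrative assumptions, not any device's real parameters; the handful of channels and coarse steps hint at why the result is crude.

```python
import math

def channel_magnitudes(frame, sample_rate, centre_freqs):
    """Magnitude of each channel's centre frequency in one frame,
    computed with a single-bin discrete Fourier transform."""
    n = len(frame)
    mags = []
    for f in centre_freqs:
        w = 2 * math.pi * f / sample_rate
        re = sum(x * math.cos(w * i) for i, x in enumerate(frame))
        im = -sum(x * math.sin(w * i) for i, x in enumerate(frame))
        mags.append(math.hypot(re, im) / n)
    return mags

def stimulation_levels(mags, levels=16, range_db=80.0, floor=1e-4):
    """Compress magnitudes logarithmically onto a few discrete current steps
    (assumed scheme: range_db below full scale maps to step 0)."""
    out = []
    for m in mags:
        db = 20 * math.log10(max(m, floor))  # floor avoids log10(0)
        step = round((db + range_db) / range_db * (levels - 1))
        out.append(min(max(step, 0), levels - 1))  # clamp to valid steps
    return out

def encode(signal, sample_rate, centre_freqs, frame_len):
    """Chop the sound into frames and return one list of levels per frame."""
    return [
        stimulation_levels(
            channel_magnitudes(signal[s:s + frame_len], sample_rate, centre_freqs)
        )
        for s in range(0, len(signal) - frame_len + 1, frame_len)
    ]

# Usage: a pure 1 kHz tone should light up only the 1 kHz channel.
rate = 8000
tone = [math.sin(2 * math.pi * 1000 * i / rate) for i in range(800)]
frames = encode(tone, rate, [500, 1000, 2000, 3000], 80)
print(len(frames), frames[0])  # 10 [0, 14, 0, 0]
```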

That offers a clue to another part of the BCI equation: what to do once you have gained access to the brain. As cochlear implants show, one option is to let the world’s most powerful learning machine do its stuff. In a famous mid-20th-century experiment, two Austrian researchers showed that the brain could quickly adapt to a pair of glasses that turned the image they projected onto the retina upside down. More recently, researchers at Colorado State University have come up with a device that converts sounds into electrical impulses. When pressed again

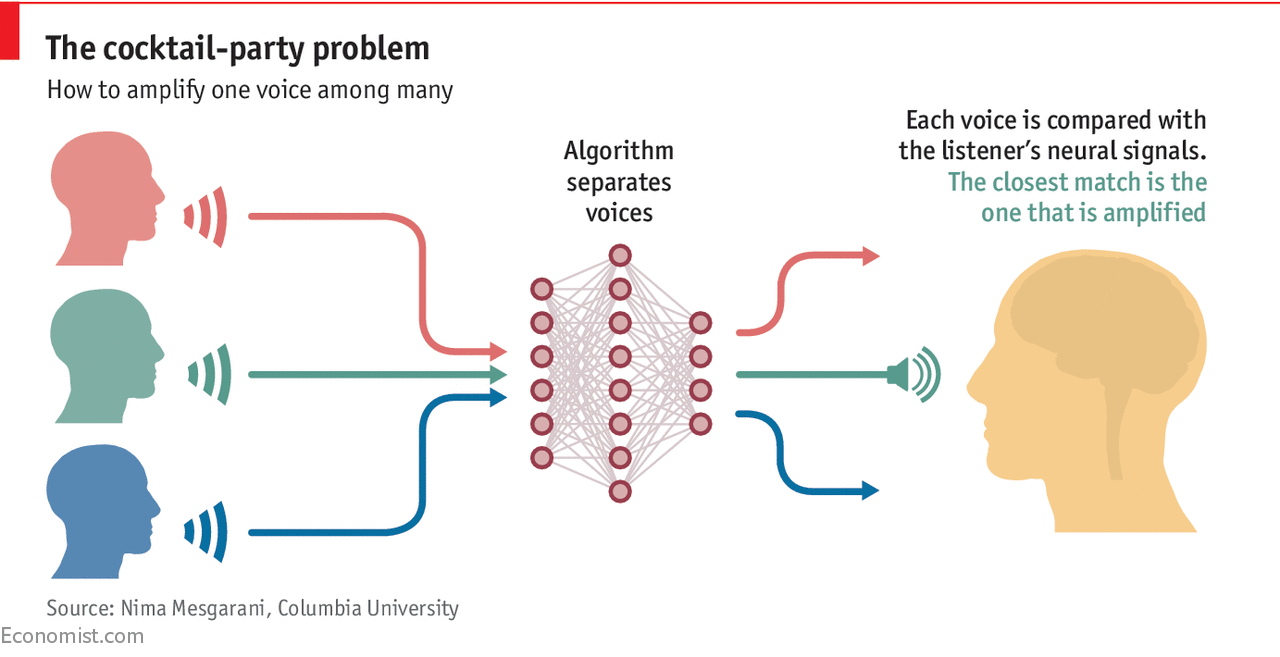

The brain, then, is remarkably good at working things out. Then again, so are computers. One problem with a hearing aid, for example, is that it amplifies every incoming sound; when you want to focus on one person in a noisy setting such as a party, that is not much help. Nima Mesgarani of Columbia University is working on a way to pick out the voice of the specific person you want to listen to.

The idea is that an algorithm will distinguish between different voices talking at the same time, creating a spectrogram (a visual representation of sound frequencies over time) of each person’s speech. It then looks at neural activity in the brain as the wearer of the hearing aid concentrates on a specific interlocutor. This activity can also be reconstructed into a spectrogram, and the voice whose spectrogram matches it is amplified (see diagram).
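The matching step lends itself to a short sketch. The Python below assumes the hard parts are already done: the separated speakers’ spectrograms and the spectrogram reconstructed from the listener’s neural activity arrive as small time-by-frequency grids. It scores each speaker by Pearson correlation with the neural reconstruction and boosts the best match by a fixed gain. The correlation score, the fourfold boost, the toy numbers and the function names are all assumptions for illustration, not the Columbia team’s actual system.

```python
import math

def _flatten(spec):
    """Turn a time-by-frequency spectrogram (list of lists) into one list."""
    return [v for frame in spec for v in frame]

def pearson(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    if va == 0 or vb == 0:
        return 0.0  # a flat spectrogram carries no information to match
    return cov / math.sqrt(va * vb)

def attended_gains(speaker_specs, neural_spec, boost=4.0):
    """Score each separated speaker against the neural reconstruction and
    return per-speaker gains that amplify the best match (hypothetical API)."""
    target = _flatten(neural_spec)
    scores = [pearson(_flatten(s), target) for s in speaker_specs]
    best = max(range(len(scores)), key=lambda i: scores[i])
    gains = [boost if i == best else 1.0 for i in range(len(scores))]
    return best, scores, gains

# Usage with toy 3x3 spectrograms; the "neural" one is a noisy copy of A.
speaker_a = [[1, 0, 3], [2, 1, 0], [0, 4, 1]]
speaker_b = [[0, 3, 0], [1, 0, 2], [3, 0, 0]]
neural = [[0.9, 0.2, 2.7], [2.1, 0.8, 0.1], [0.2, 3.6, 1.2]]
best, scores, gains = attended_gains([speaker_a, speaker_b], neural)
print(best, gains)  # 0 [4.0, 1.0]
```

Correlation is a convenient choice here because it ignores overall loudness, so a quiet but attended voice can still win the match.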

Algorithms have done better than brain plasticity at enabling paralysed people to send a cursor to a target using thought alone. In research published earlier this year, for example, Dr Shenoy and his collaborators at Stanford University recorded a big improvement in brain-controlled typing. This stemmed not from new signals or whizzier interfaces but from better maths.

One contribution came from Dr Shenoy’s use of data generated during the testing phase of his algorithm. In the training phase a user is repeatedly told to move a cursor to a particular target; machine-learning programs identify patterns in neural activity that correlate with this movement. In the testing phase the user is shown a grid of letters and told to move the cursor wherever he wants, which tests the algorithm’s ability to predict the user’s wishes. The user’s intention to hit a specific target also shows up in the data; refitting the algorithm to include that information too makes the cursor move to its target more quickly.
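The article does not spell out the maths, but the general idea of refitting on intention can be sketched. The Python below assumes a simple linear decoder (neural features in, cursor velocity out) fitted by least squares, and assumes that at every moment of the testing phase the user was trying to head straight for the letter eventually selected. Each recorded moment is re-labelled with a velocity of the same speed pointing at that target, and the decoder is fitted again. The decoder form, the small ridge term and all function names are hypothetical; this is an illustration of the idea, not Dr Shenoy’s actual algorithm, and real decoders are considerably more sophisticated.

```python
import math

def solve(a, b):
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented copy; inputs untouched
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def fit_decoder(features, velocities, ridge=1e-6):
    """Lightly ridge-regularised least squares from features to velocity;
    returns one weight vector per velocity dimension."""
    k = len(features[0])
    # Normal equations: (F^T F + ridge * I) w = F^T v
    ftf = [[sum(f[i] * f[j] for f in features) + (ridge if i == j else 0.0)
            for j in range(k)] for i in range(k)]
    weights = []
    for d in range(len(velocities[0])):
        ftv = [sum(f[i] * v[d] for f, v in zip(features, velocities)) for i in range(k)]
        weights.append(solve(ftf, ftv))
    return weights

def decode(weights, feature):
    """Apply the linear decoder to one vector of neural features."""
    return tuple(sum(w * x for w, x in zip(row, feature)) for row in weights)

def intended_velocity(position, target, decoded):
    """Assumed intention: keep the decoded speed but point it at the target."""
    dx, dy = target[0] - position[0], target[1] - position[1]
    dist = math.hypot(dx, dy)
    if dist == 0:
        return (0.0, 0.0)  # already on target: intended to stay put
    speed = math.hypot(*decoded)
    return (speed * dx / dist, speed * dy / dist)

def refit(weights, features, positions, targets):
    """Re-label testing-phase data with intended velocities and fit again."""
    intended = [intended_velocity(p, t, decode(weights, f))
                for f, p, t in zip(features, positions, targets)]
    return fit_decoder(features, intended)

# Usage: a toy initial decoder with its axes swapped gets corrected.
old = [[0.0, 1.0], [1.0, 0.0]]
feats = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
positions = [(0.0, 0.0)] * 3
targets = [(1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
new = refit(old, feats, positions, targets)
print(decode(old, [1.0, 0.0]), decode(new, [1.0, 0.0]))
```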
